# Week 2 - Regression and classification

Last edited: 2024-01-17

# Regression history

The name comes from "regression to the mean": the technique was first used to describe that phenomenon, and the word "regression" then stuck as the name of the technique itself.

# Polynomial regression

Once we have an objective function, we can use training data to fit the parameters $c_{p}$ (the coefficients of the polynomial).

Note that linear regression is the special case of polynomial regression with degree $k=1$.
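As a sketch of the model these notes seem to assume (the notes do not write it out, so the symbol $\hat{f}$ is an assumption; $c_{p}$ and $k$ are the parameters and degree mentioned above):

$$
\hat{f}(x) = \sum_{p=0}^{k} c_{p} x^{p}, \qquad \text{so for } k=1: \quad \hat{f}(x) = c_{0} + c_{1} x .
$$

With $k=1$ this is a straight line with intercept $c_{0}$ and slope $c_{1}$, which is why linear regression appears as a special case.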

# Polynomial regression using MSE

Calculate polynomial regression coefficients for MSE
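One possible way to compute the coefficients, sketched with NumPy. It assumes the model $\hat{f}(x) = \sum_{p=0}^{k} c_{p} x^{p}$ and the usual mean squared error over the training points; minimising that error is a linear least-squares problem whose solution satisfies the normal equations $X^{\top} X c = X^{\top} y$, where column $p$ of $X$ holds $x^{p}$. The helper names `fit_polynomial`, `predict` and `mse`, and the toy data, are illustrative only and do not come from the course.

```python
import numpy as np

def fit_polynomial(x, y, k):
    """Return coefficients c_0..c_k that minimise the mean squared error."""
    # Design matrix with columns x^0, x^1, ..., x^k.
    X = np.vander(x, k + 1, increasing=True)
    # Least-squares solution: X @ c is as close to y as possible in squared error.
    c, *_ = np.linalg.lstsq(X, y, rcond=None)
    return c

def predict(c, x):
    """Evaluate sum_p c_p * x^p at each point of x."""
    return np.vander(x, len(c), increasing=True) @ c

def mse(c, x, y):
    """Mean squared error of the polynomial with coefficients c on (x, y)."""
    return float(np.mean((predict(c, x) - y) ** 2))

# Toy data (an assumption for illustration) lying exactly on y = 1 + 2x + 3x^2.
x = np.linspace(-1.0, 1.0, 20)
y = 1 + 2 * x + 3 * x ** 2
c = fit_polynomial(x, y, k=2)
print(c, mse(c, x, y))
```

`np.linalg.lstsq` is used rather than inverting $X^{\top} X$ directly because it tends to behave better numerically when the degree is high and the columns of $X$ become nearly dependent.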

# Picking a degree

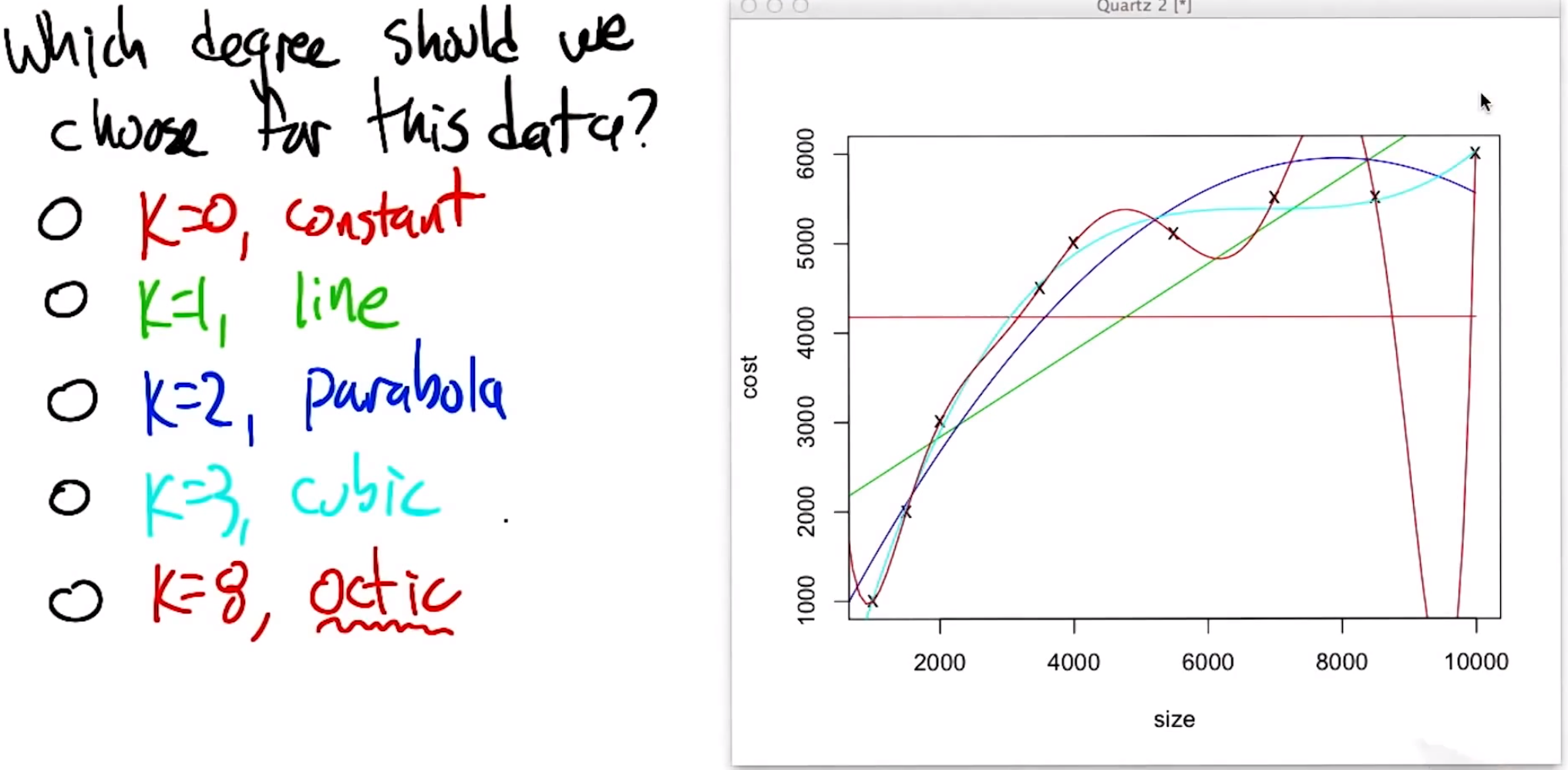

Here is an example of polynomial regression fitted with polynomials of different degrees.

As we increase the degree of the fitting polynomial, the fit to the points we are training on gets better. However, beyond a certain degree the curve becomes less useful away from those points.
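The first half of that claim can be checked numerically. The sketch below uses synthetic data (noisy samples of a sine curve, which is an assumption and not the data in the figures) and a hypothetical helper `train_mse`, and prints the training MSE for degrees 1 to 8.

```python
import numpy as np

# Synthetic training set: an assumption for illustration only.
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 15)
y = np.sin(3 * x) + rng.normal(scale=0.2, size=x.size)

def train_mse(x, y, k):
    """Fit a degree-k polynomial by least squares and return its training MSE."""
    X = np.vander(x, k + 1, increasing=True)   # columns x^0 .. x^k
    c, *_ = np.linalg.lstsq(X, y, rcond=None)
    return float(np.mean((X @ c - y) ** 2))

errors = [train_mse(x, y, k) for k in range(1, 9)]
for k, e in enumerate(errors, start=1):
    print(f"degree {k}: training MSE = {e:.4f}")
```

Each degree-$k$ polynomial is also a degree-$(k+1)$ polynomial with a zero leading coefficient, so the training error cannot go up as the degree increases. That is exactly why training error alone cannot tell us when to stop.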

This is easy to see by eye, but how can we detect it computationally?

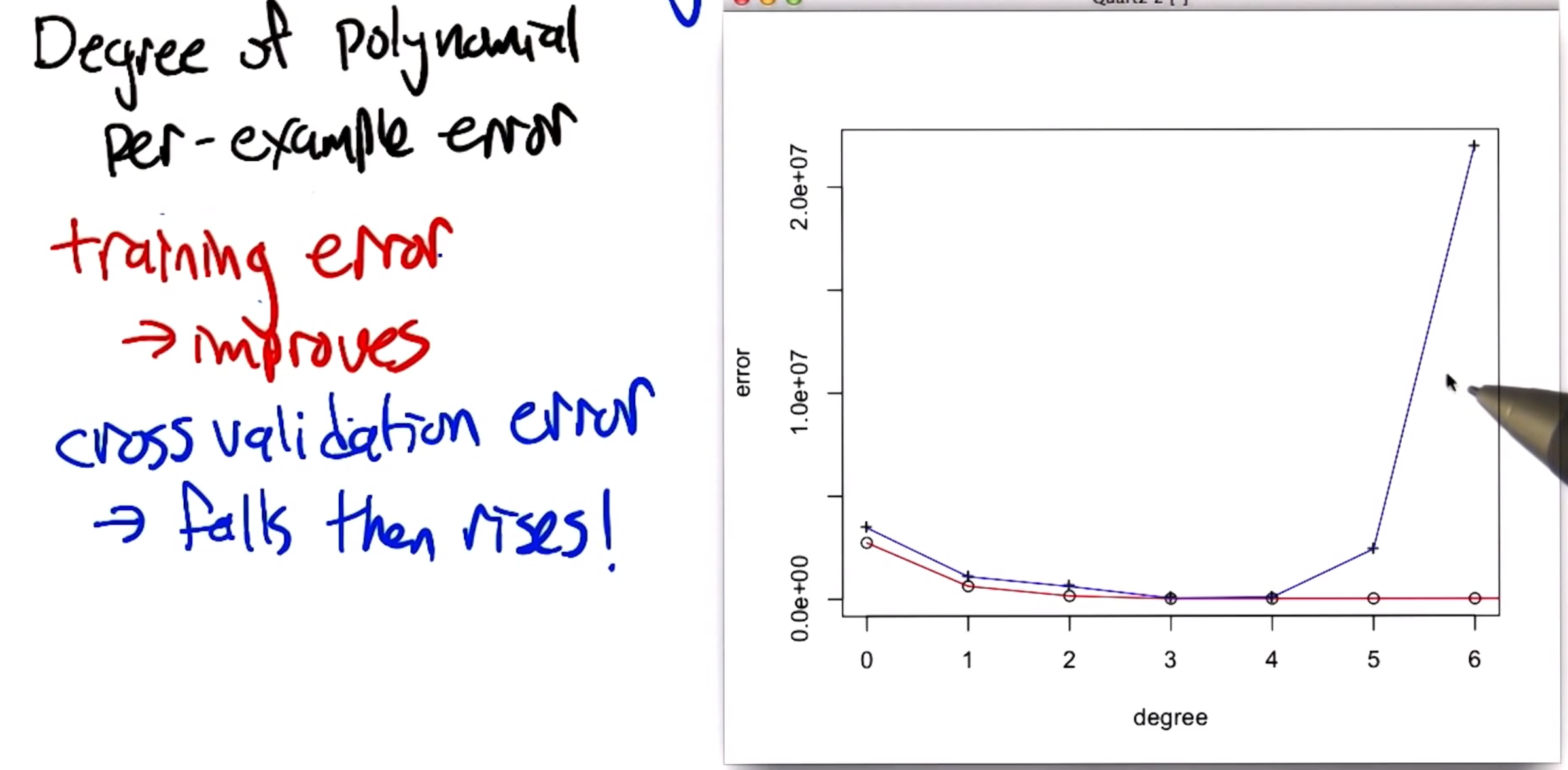

# Cross validation

When we use cross validation to assess the accuracy of the fits in the previous example, the result agrees with our intuition. Whilst the higher-order polynomials fit the training data more closely, they fit the test data worse. We could therefore use cross validation to pick the best polynomial degree without compromising the integrity of the testing data.

In general, you need to find the sweet spot between underfitting and overfitting by varying the degree of the polynomial.
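A minimal sketch of choosing the degree by cross validation, assuming plain $k$-fold splitting with 5 folds and MSE as the score. The data is synthetic (a noisy quadratic) and the helpers `fit`, `mse` and `cv_mse` are hypothetical names, not part of the course material.

```python
import numpy as np

def fit(x, y, k):
    """Least-squares coefficients c_0..c_k of a degree-k polynomial."""
    X = np.vander(x, k + 1, increasing=True)
    c, *_ = np.linalg.lstsq(X, y, rcond=None)
    return c

def mse(c, x, y):
    """Mean squared error of the polynomial with coefficients c on (x, y)."""
    return float(np.mean((np.vander(x, len(c), increasing=True) @ c - y) ** 2))

def cv_mse(x, y, k, n_folds=5, seed=0):
    """Average validation MSE of a degree-k fit over n_folds folds."""
    idx = np.random.default_rng(seed).permutation(len(x))
    folds = np.array_split(idx, n_folds)
    scores = []
    for i, val in enumerate(folds):
        # Train on every fold except the i-th, validate on the i-th.
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        c = fit(x[train], y[train], k)
        scores.append(mse(c, x[val], y[val]))
    return float(np.mean(scores))

# Synthetic training data: an assumption for illustration only.
rng = np.random.default_rng(1)
x = rng.uniform(-1.0, 1.0, size=40)
y = 1 - 2 * x + 3 * x ** 2 + rng.normal(scale=0.1, size=x.size)

scores = {k: cv_mse(x, y, k) for k in range(1, 11)}
best = min(scores, key=scores.get)
print("cross-validated MSE per degree:", scores)
print("selected degree:", best)
```

Only the training data is split into folds here, so a separate test set can stay untouched until the degree has been chosen.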